The more I read about how our brains evolved, the more I realize how spot on NEAT (NeuroEvolution of Augmenting Topologies) is. NEAT evolves a network’s topology from scratch.1 It does this by adding new neurons and connections on top of whatever structure existed before, or by mutating existing connections by shifting their weights. The interesting thing is that once NEAT adds a new part, later mutations can build on it: mutate it, or connect it to other parts. Here’s a visual example.

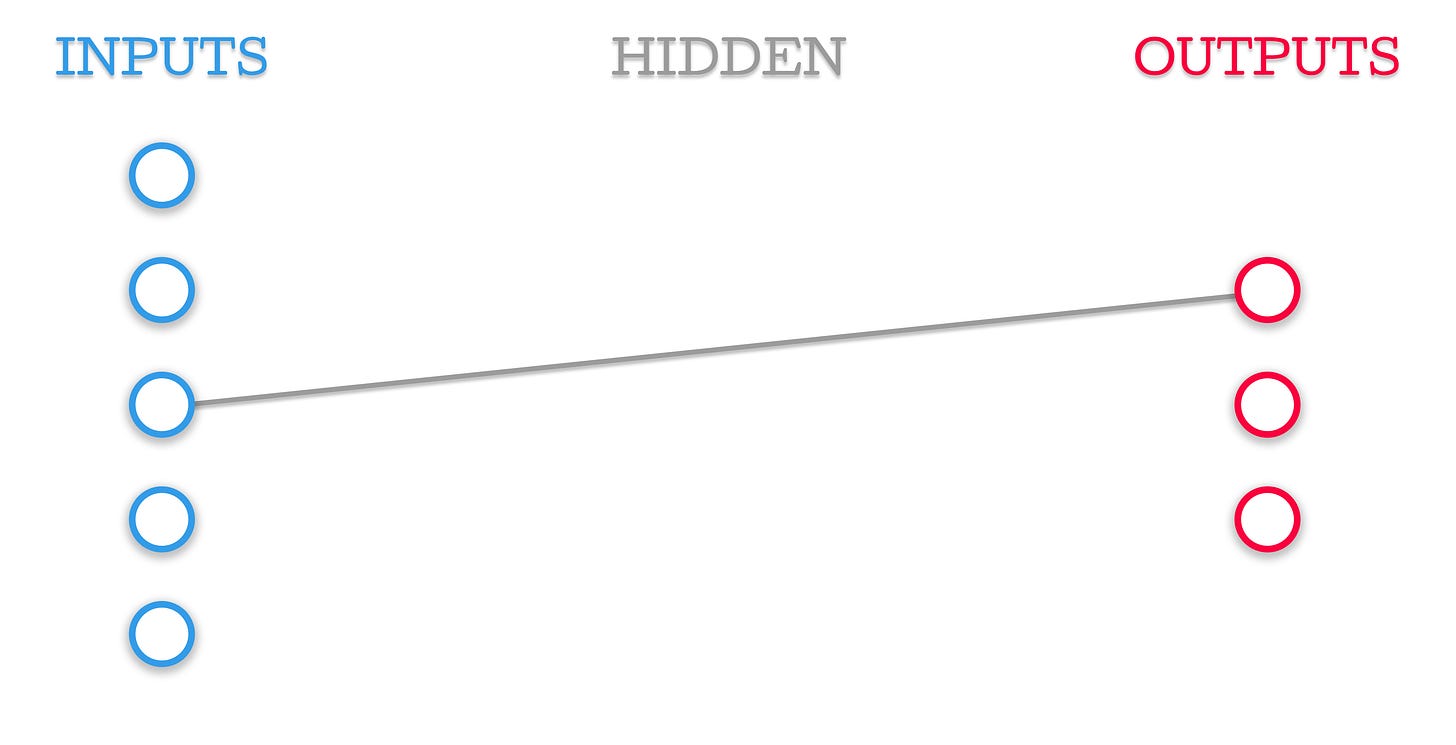

This is a network initialized with only input and output nodes.

This is exactly what a neural network initialized by the NEAT algorithm would look like. Through mutations, NEAT might add a connection between an input and an output.

Then split that connection by adding a neuron in the middle.

Then another connection.

Then another neuron!

Then perhaps another connection!
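The mutation sequence above can be sketched in a few lines of Python. This is a minimal illustration, not NEAT’s reference implementation: it assumes feed-forward networks only, deletes a split connection where the original NEAT paper disables it, and leaves out innovation numbers, speciation and crossover entirely. The class and function names (`Genome`, `mutate_add_connection`, `mutate_add_node`, `mutate_weight`) are hypothetical.

```python
import random
from dataclasses import dataclass, field


@dataclass
class Genome:
    """Minimal NEAT-style genome (hypothetical): node ids plus weighted connections.

    Node ids: inputs are 0..I-1, outputs are I..I+O-1, hidden neurons come after.
    """
    num_inputs: int
    num_outputs: int
    hidden: list[int] = field(default_factory=list)
    connections: dict[tuple[int, int], float] = field(default_factory=dict)

    def sources(self) -> list[int]:
        # Connections may start at inputs or hidden neurons, never at outputs.
        return list(range(self.num_inputs)) + self.hidden

    def targets(self) -> list[int]:
        # Connections may end at outputs or hidden neurons, never at inputs.
        first = self.num_inputs
        return list(range(first, first + self.num_outputs)) + self.hidden

    def creates_cycle(self, src: int, dst: int) -> bool:
        # Adding src -> dst closes a loop if src is already reachable from dst.
        stack, seen = [dst], set()
        while stack:
            node = stack.pop()
            if node == src:
                return True
            if node in seen:
                continue
            seen.add(node)
            stack.extend(b for (a, b) in self.connections if a == node)
        return False


def mutate_add_connection(genome: Genome, rng: random.Random) -> bool:
    """Connect two previously unconnected neurons with a random weight."""
    candidates = [
        (s, d)
        for s in genome.sources()
        for d in genome.targets()
        if s != d and (s, d) not in genome.connections and not genome.creates_cycle(s, d)
    ]
    if not candidates:
        return False
    genome.connections[rng.choice(candidates)] = rng.uniform(-1.0, 1.0)
    return True


def mutate_add_node(genome: Genome, rng: random.Random) -> bool:
    """Split an existing connection src -> dst into src -> new -> dst."""
    if not genome.connections:
        return False
    src, dst = rng.choice(list(genome.connections))
    old_weight = genome.connections.pop((src, dst))  # original NEAT disables it instead
    new_id = genome.num_inputs + genome.num_outputs + len(genome.hidden)
    genome.hidden.append(new_id)
    genome.connections[(src, new_id)] = 1.0          # incoming link gets weight 1
    genome.connections[(new_id, dst)] = old_weight   # outgoing link keeps the old weight
    return True


def mutate_weight(genome: Genome, rng: random.Random, scale: float = 0.1) -> bool:
    """Shift one existing weight by a small Gaussian perturbation."""
    if not genome.connections:
        return False
    key = rng.choice(list(genome.connections))
    genome.connections[key] += rng.gauss(0.0, scale)
    return True


if __name__ == "__main__":
    rng = random.Random(0)
    genome = Genome(num_inputs=5, num_outputs=3)  # no connections yet, like the first image
    # Replay the walkthrough: connection, neuron, connection, neuron, connection.
    for step in (mutate_add_connection, mutate_add_node,
                 mutate_add_connection, mutate_add_node, mutate_add_connection):
        step(genome, rng)
    print("hidden neurons:", genome.hidden)
    print("connections:", sorted(genome.connections))
```

Running it grows a network with 5 inputs and 3 outputs into one with two hidden neurons and five connections. Each add-neuron step can only act on a connection that already exists, which is the “build on earlier mutations” behaviour described above.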

As you can see, as we mutate the network, NEAT can use the mutations and build on them. Here’s a theoretical example of a much more complex network.

What’s interesting is that as the networks evolve, the number of possible mutations NEAT can pick from increases. That number is what I call the evolution horizon, but I’m sure there’s another name for it.

To determine the evolution horizon at the network’s first step, we just need to count the number of ways NEAT could connect an input to an output. In this case, there are 5 inputs and 3 outputs. It’s quite simple: you just multiply the number of inputs by the number of outputs, where E is the evolution horizon, I is the number of inputs, and O is the number of outputs:

$$E = I \times O = 5 \times 3 = 15$$

With more complex networks, the equation gets more complex as well, but that’s beside the point of this post.

The important point is that as the network evolves, the evolution horizon keeps expanding, because every new neuron can send and receive connections to and from the neurons already there. The number of possibilities for the network grows as the network becomes more complex. So the more complex the network is, the more complex it can become. It’s a feedback loop.
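The general equation is beside the point of this post, but a rough count makes the growth concrete. The sketch below uses an assumed definition that the post does not spell out: the horizon is the number of legal new feed-forward connections plus the number of existing connections an add-neuron mutation could split, with weight mutations ignored because weights are continuous. The function names and node numbering are hypothetical.

```python
from itertools import product


def reachable_from(connections: set[tuple[int, int]], start: int) -> set[int]:
    """Every node reachable from `start` by following connections forward."""
    seen: set[int] = set()
    stack = [start]
    while stack:
        node = stack.pop()
        for src, dst in connections:
            if src == node and dst not in seen:
                seen.add(dst)
                stack.append(dst)
    return seen


def evolution_horizon(inputs, outputs, hidden, connections) -> int:
    """Count the structural mutations available to a feed-forward network.

    Assumed definition (hypothetical): one add-connection candidate per legal
    new link, plus one add-neuron candidate per existing connection to split.
    """
    sources = list(inputs) + list(hidden)   # links may start at inputs or hidden neurons
    targets = list(outputs) + list(hidden)  # links may end at outputs or hidden neurons
    new_links = 0
    for src, dst in product(sources, targets):
        if src == dst or (src, dst) in connections:
            continue
        if src in reachable_from(connections, dst):  # would create a loop
            continue
        new_links += 1
    return new_links + len(connections)


inputs, outputs = range(5), range(5, 8)  # 5 inputs (ids 0-4), 3 outputs (ids 5-7)
print(evolution_horizon(inputs, outputs, [], set()))              # 15, i.e. I x O
print(evolution_horizon(inputs, outputs, [], {(0, 5)}))           # 15
print(evolution_horizon(inputs, outputs, [8], {(0, 8), (8, 5)}))  # 23
```

On the first step this count reduces to E = I × O = 15. After one connection is added and then split by a new hidden neuron (id 8), the count is already 23, because that neuron can both send and receive new links.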

This is different from Neural Architecture Search (NAS), because NAS starts by finding an architecture and then optimizing it.2 The problem with this approach is that, to find a structure we can optimize well for a task, we would need to search the full evolution horizon. In the case of developing a human brain, the evolution horizon is way too large to search.

For example, in the grand scheme of verbal communication among animals on Earth, humans have a very complex approach. The intricacies of our languages evolved alongside our control over, and the range of, the sounds we could produce. Our early ancestors had much more limited language capabilities; it practically boiled down to grunting and pointing.

As our supralaryngeal vocal tract grew more sophisticated (allowing us to create a wider range of sounds, i.e., more outputs)3, the evolution horizon of our language expanded.

As our capacity for language increased, so did its complexity. This is what I want to replicate in a few weeks: slowly building up the foundations instead of taking one big step. Without good foundations, I won’t be able to get anywhere.

To wrap up this post, I’m leaving you with a poll. I’m curious to see what the results will be. See you all next week! 👋

Yes, I am thinking that disconnecting is not the issue, but whether a learned mechanism that automatically adjusts to shut off redundancies could be practical. A sort of learned self-optimization.

But before even considering that, development is the real key.

Still, if such a thing were possible at some point, it would be one hell of a leap forward, even improving on organic models.

But I get ahead of myself.

A thought has occurred to me.

You discuss building an ever-increasing complexity of connections. Has any thought been given to the extinction of what will inevitably become, in some cases, redundant connections? This could streamline the process and reduce power consumption.

In organic intelligence, the extinction of neural connections is incredibly slow, which is one of the key issues in addiction recovery, for example. Unlearning is hard, much harder than learning, but it is often required. Could an ability to maximize efficiency by discarding redundant connections be built in? But perhaps this is putting the cart before the horse, or indeed before the mere concept of an embryonic horse, to use a more accurate metaphor.